Using OpenDataSky API with AnythingLLM

AnythingLLM is an all-in-one AI application that provides Retrieval-Augmented Generation (RAG), AI agents, and other advanced capabilities without requiring coding or infrastructure setup. It supports local LLMs and can be run as a fully configurable, private environment.

Installing the AnythingLLM Client

Follow the installation guide to download and install the latest version of AnythingLLM.

Configuration

This guide uses AnythingLLM Desktop for Windows as an example.

Initial Setup

When you first open the client, click "Get Started" on the AnythingLLM welcome page to proceed.

Configure LLM Preferences

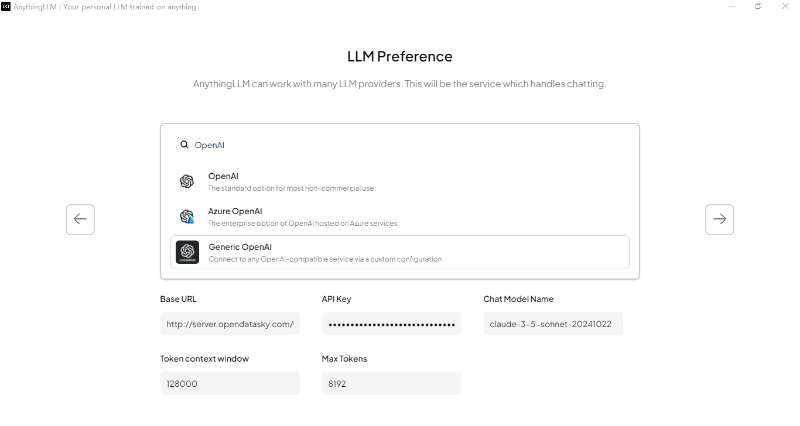

Navigate to the LLM Preference page to set up your desired text model.

- Model Provider: Select "Generic OpenAI".

- Base URL: Enter `http://server.opendatasky.com/v1/api/open-ai/ds`.

- API Key: Use your OpenDataSky account's API key. Retrieve it from the API Key Management page.

- Chat Model Name: Specify the text model you wish to use (refer to the Model List).

- Token Context Window: Set the context length (e.g., `128000` for 128k context if unsure).

- Max Tokens: Define the maximum output length (e.g., `1024`).
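If you want to sanity-check the Base URL, API key, and model name outside the client, a direct request can help. The following is a minimal sketch, assuming the OpenDataSky endpoint follows the OpenAI-compatible convention of serving chat completions at `<Base URL>/chat/completions` with a Bearer token. The helper names `build_chat_request` and `send`, the key `YOUR_API_KEY`, and the model `your-model-name` are placeholders for illustration, not part of either product.

```python
import json
import urllib.request

# Base URL taken from the settings above.
BASE_URL = "http://server.opendatasky.com/v1/api/open-ai/ds"


def build_chat_request(base_url, api_key, model, max_tokens=1024):
    """Build (but do not send) an OpenAI-style chat completion request."""
    # Assumption: the chat route is <base_url>/chat/completions.
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": "Hello"}],
        "max_tokens": max_tokens,  # mirrors the Max Tokens setting
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage (performs a real network call, so it is left commented out):
# print(send(build_chat_request(BASE_URL, "YOUR_API_KEY", "your-model-name")))
```

If the call fails with an authentication or "model not found" style error, the same values would likely fail inside AnythingLLM as well, so it is worth rechecking the API key and the model name against the Model List first.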

Finalize Setup

Complete the onboarding process to begin using AnythingLLM with your configured models.

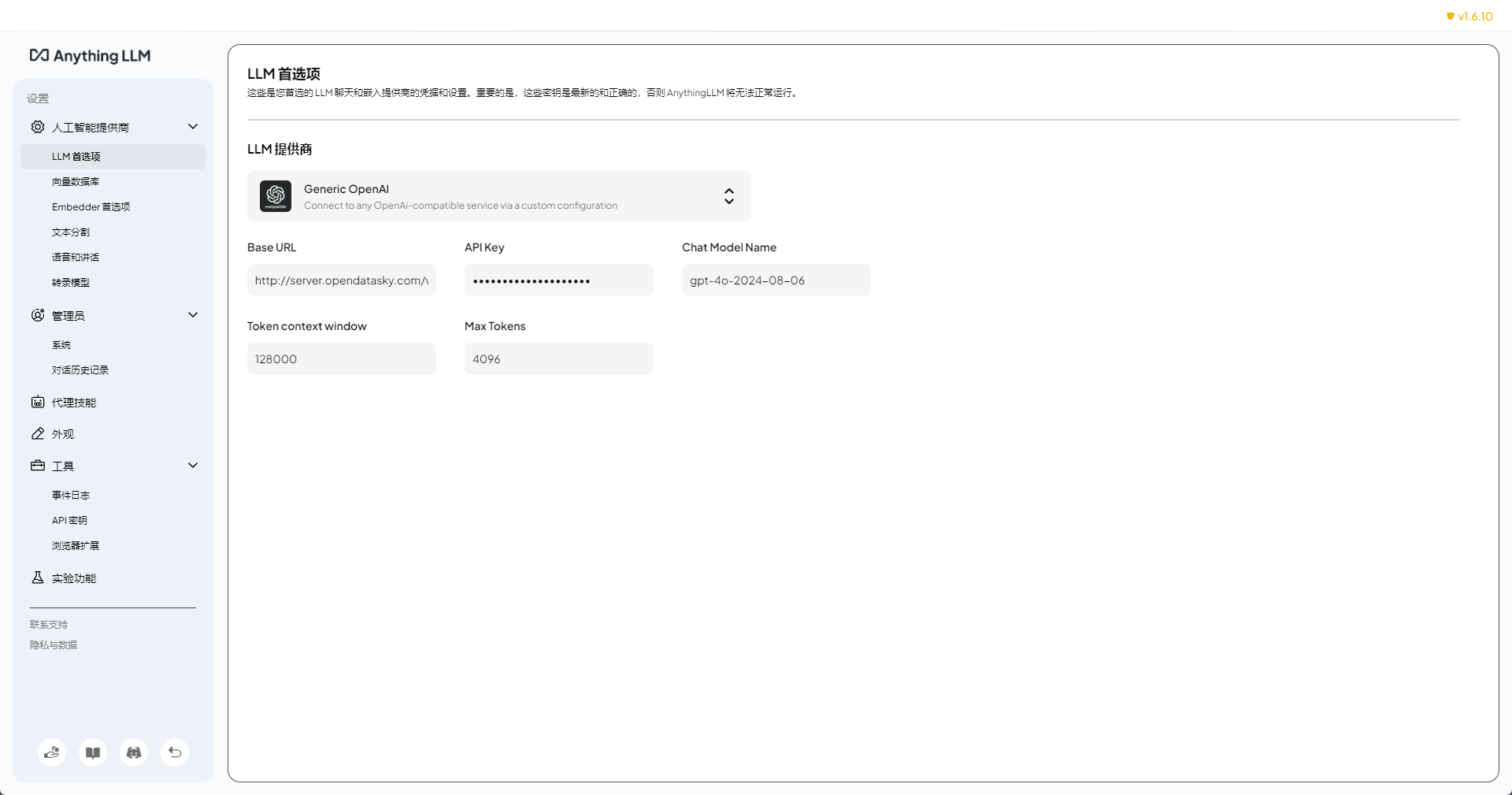

Modifying the Text Model

You can adjust text model settings at any time via the LLM Preferences in the settings menu:

- Update the Model Name, Token Context Window, or Max Tokens as needed.

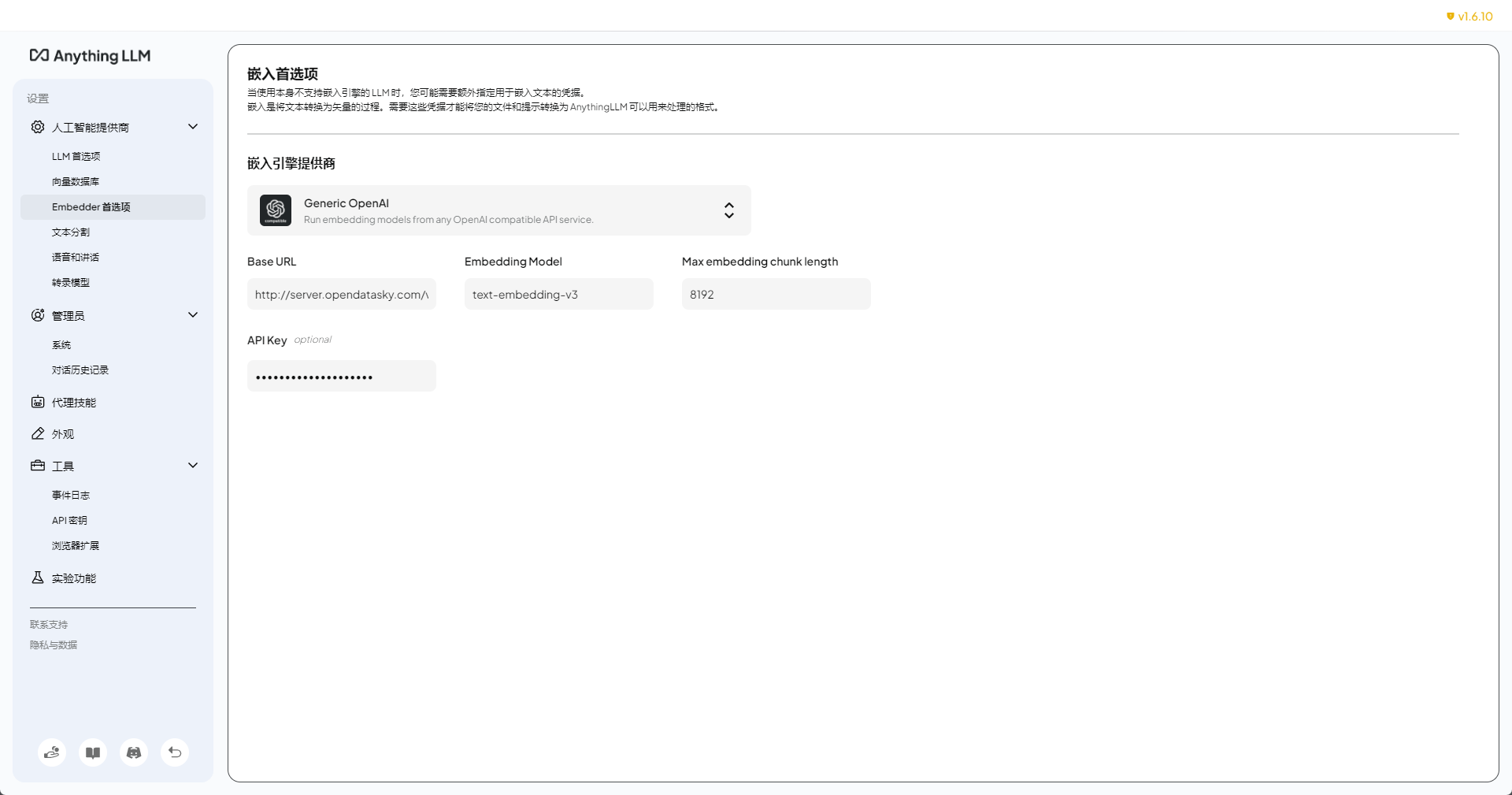

Configuring the Embedding Model

AnythingLLM includes a default local embedding model, but you can optionally use OpenDataSky's hosted embedding models for improved performance:

Access Embedder Settings

Navigate to Embedder Preferences in the settings menu.

Configure Parameters

- Embedding Engine Provider: Select "Generic OpenAI".

- Base URL: Enter `http://server.opendatasky.com/v1/api/open-ai/ds`.

- Embedding Model: Specify the desired embedding model (refer to the Model List).

- Max Embedding Chunk Length: Set an appropriate value (e.g., `8192`).

- API Key: Re-enter your OpenDataSky API key from the API Key Management page.
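To illustrate how these embedder settings fit together, here is a rough sketch that splits text into chunks and builds an embeddings request. It assumes the endpoint exposes an OpenAI-compatible `<Base URL>/embeddings` route and treats the chunk length as a character count; both are assumptions, and AnythingLLM's own splitter may behave differently. The function names and `your-embedding-model` are hypothetical.

```python
import json
import urllib.request


def split_into_chunks(text, max_chunk_length=8192):
    """Split text into consecutive pieces of at most max_chunk_length characters."""
    if max_chunk_length <= 0:
        raise ValueError("max_chunk_length must be positive")
    return [
        text[start:start + max_chunk_length]
        for start in range(0, len(text), max_chunk_length)
    ]


def build_embedding_request(base_url, api_key, model, chunks):
    """Build (but do not send) an OpenAI-style embeddings request."""
    # Assumption: the embeddings route is <base_url>/embeddings.
    url = base_url.rstrip("/") + "/embeddings"
    payload = {"model": model, "input": chunks}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Usage (the request is only constructed here, not sent):
# chunks = split_into_chunks(open("notes.txt").read())
# request = build_embedding_request(
#     "http://server.opendatasky.com/v1/api/open-ai/ds",
#     "YOUR_API_KEY",
#     "your-embedding-model",
#     chunks,
# )
```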

Notes

- Ensure all model names and parameters align with the specifications in the Model List.

- For optimal performance, adjust context and token limits based on your selected model's documentation.
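As a rough aid for choosing these limits, the sketch below estimates how many tokens remain for the prompt (system message, chat history, and retrieved context) once the output allowance is reserved. It assumes that generated tokens count against the same context window as the prompt, which holds for many models but should be confirmed in the documentation of the model you select. The function name `prompt_budget` is illustrative.

```python
def prompt_budget(context_window, max_tokens):
    """Return the tokens left for the prompt after reserving max_tokens for output."""
    if context_window <= 0 or max_tokens <= 0:
        raise ValueError("both limits must be positive")
    if max_tokens >= context_window:
        raise ValueError("max_tokens must be smaller than the context window")
    return context_window - max_tokens


# With the example values from this guide:
print(prompt_budget(128000, 1024))  # prints 126976
```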

By following these steps, you can seamlessly integrate OpenDataSky's models into AnythingLLM for advanced AI workflows.